Modern laptops are quite expensive and hard to upgrade. While they are really handy for working in multiple places, they are not that efficient for advanced R&D activities such as virtualization combined with large-scale software development, where I/O speed strongly affects overall performance and compilation times. I was wondering whether an M.2 NVMe disk on a PCI-E controller could work with my old desktop workstation and give an additional performance benefit over a standard SSD attached to the onboard SATA controller.

An old desktop PC only supports SATA drives. Switching to a SATA SSD (a Solid State Drive based on NAND Flash electronics) in place of a conventional HDD (mechanical drive) can boost I/O performance noticeably. So far we had 3.5″ (desktop) and 2.5″ (laptop) physical disk dimensions available. Then M.2 SSD drives showed up, establishing a new standard with a smaller form factor intended for embedded systems and lightweight laptops. M.2 defines only the physical form factor and connector, while the disk itself can implement either the SATA interface (around 500MB/s read/write speed) or the NVMe interface (around 3000MB/s read/write speed or better).

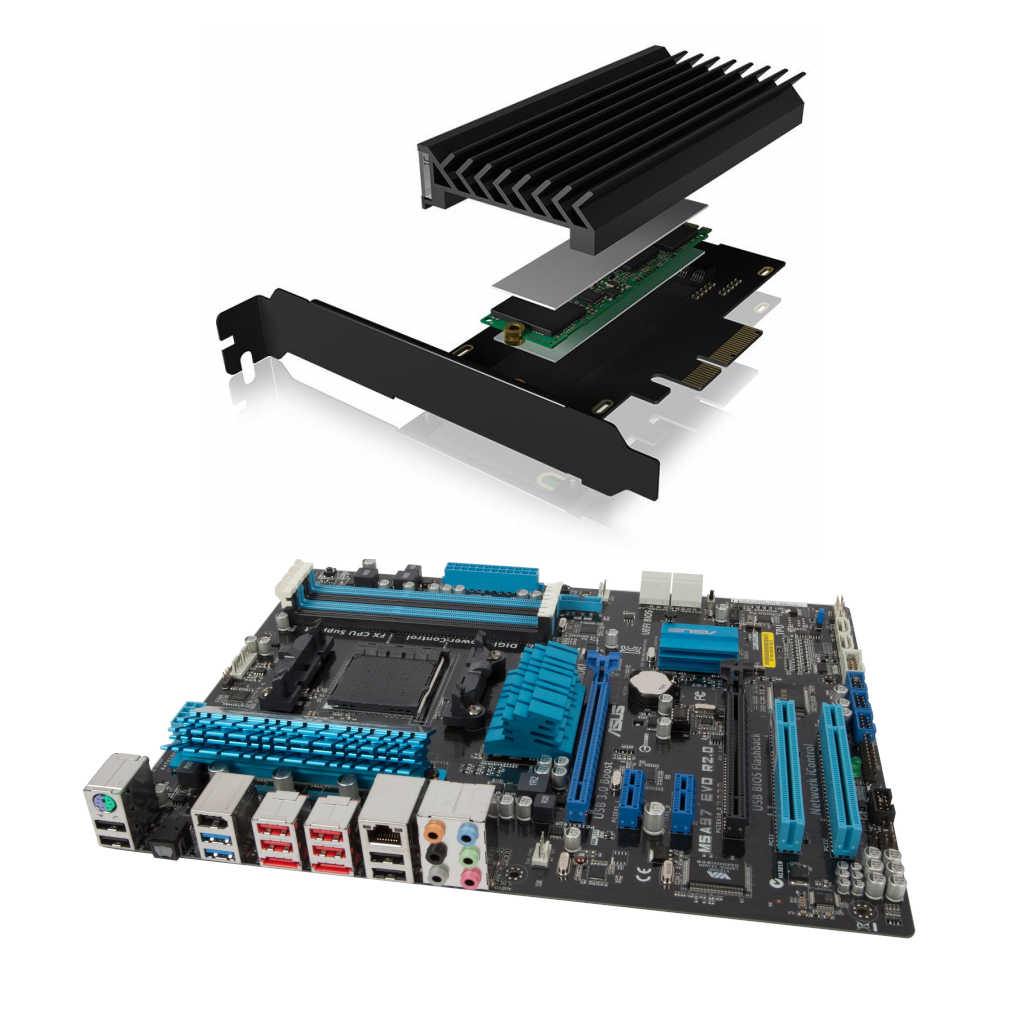

So I decided to try an M.2 NVMe Samsung SSD 980 1TB drive on an ICYBOX IB-PCI224M2-ARGB PCI-E 4.0 controller in my ASUS M5A97 R2.0 motherboard (last available BIOS 2603), which only supports PCI-E 2.0. I already have several internal and external SATA SSD drives in both 2.5″ and M.2 form factors. Even a basic M.2 NVMe drive on a PCI-E controller seems to be a more future-proof purchase: for the same amount of money it will be ready for a possible motherboard replacement, and it may bring an additional performance gain over an SSD attached to the onboard SATA controller. The operating system is the Open-Source FreeBSD Unix, version 13-STABLE, and the filesystem is ZFS (testing was done with no compression and no encryption).

It turns out that a PCI-E 4.0 card can work on an older PCI-E 2.0 bus (the speed is of course limited to what the slower bus allows). The mainboard BIOS does not see the NVMe controller in UEFI mode (TODO), so it is not possible to boot from it without an additional SATA drive that holds the FreeBSD bootloader / kernel (TODO). FreeBSD can see and use both the PCI-E controller and the M.2 NVMe drive with no problem. Let's look at the data transfer speed results in numbers.
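To put the measurements in context, the theoretical ceiling of the slower bus can be estimated from the per-lane signalling rate and line encoding of each PCI-E generation. The sketch below is only a rough upper bound: it ignores packet and protocol overhead, and the lane count of four is an assumption about how the card is seated in the slot, not something verified on this machine. The function and constant names are made up for illustration.

```python
# Per-lane signalling rate (GT/s) and line-encoding efficiency
# for the PCI-E generations discussed in this post.
PCIE_GENERATIONS = {
    "2.0": (5.0, 8 / 10),      # 8b/10b encoding
    "3.0": (8.0, 128 / 130),   # 128b/130b encoding
    "4.0": (16.0, 128 / 130),  # 128b/130b encoding
}

# ASSUMPTION: the controller card negotiates four lanes.
ASSUMED_LANES = 4


def negotiated_generation(card: str, slot: str) -> str:
    """A link runs at the highest generation that both ends support."""
    return min(card, slot, key=float)


def link_bandwidth_mb_s(generation: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth in MB/s, before protocol overhead."""
    gt_per_s, efficiency = PCIE_GENERATIONS[generation]
    # GT/s times efficiency is usable Gbit/s per lane; /8 converts to GB/s.
    return gt_per_s * efficiency / 8 * 1000 * lanes


generation = negotiated_generation(card="4.0", slot="2.0")
ceiling = link_bandwidth_mb_s(generation, ASSUMED_LANES)
print(f"PCI-E {generation} x{ASSUMED_LANES}: about {ceiling:.0f} MB/s at most")
```

Under these assumptions the link tops out at roughly 2000 MB/s, so the PCI-E 2.0 slot limits the drive to well below what it could reach on a newer board.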

Average in-filesystem StressDisk large file write speed results:

- Onboard SATA 2x WDEARS RED 2TB ZFS RAID0 STRIPE: 188MB/s.

- PCI-E ICYBOX M.2 NVMe Samsung SSD 980 1TB ZFS: 410MB/s.
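When comparing two throughput figures it is easy to mix up "N times the speed" with "N percent faster". A minimal sketch of the arithmetic for the two StressDisk results above (the helper name `speedup` is made up for illustration):

```python
def speedup(new_mb_s: float, old_mb_s: float) -> tuple:
    """Return (ratio, percent_faster) of the new result over the old one."""
    ratio = new_mb_s / old_mb_s
    return ratio, (ratio - 1) * 100


# StressDisk large file write results: NVMe drive vs. HDD stripe.
ratio, percent_faster = speedup(410, 188)
print(f"{ratio:.2f}x the throughput, {percent_faster:.0f}% faster")
# prints "2.18x the throughput, 118% faster"
```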

DiskInfo single drive summary results (O: outside, M: middle, I: inside transfer rate):

- PCI-E ICYBOX M.2 NVMe Samsung SSD 980 1TB ZFS: O:1087557, M:1099090, I:1160432 kbytes/sec.

- Onboard SATA 3.5″ 2TB 5400RPM HDD WD20EFRX (RED): O:146521, M:116847, I:70159 kbytes/sec.

- Onboard SATA 3.5″ 2TB 5400RPM HDD WD20EARS (GREEN): O:113811, M:87543, I:49209 kbytes/sec.

- External USB 3.1 M.2 SATA SSD disk enclosure: O:31503, M:31850, I:31900 kbytes/sec.

As we can see above, from the user's perspective in an out-of-the-box configuration, the large file write speed of the NVMe SSD is 2.18 times that of the HDD RAID (410MB/s versus 188MB/s, which is 118% faster); this may still need some filesystem tuning. The average DiskInfo transfer rate (arithmetic mean of the outside, middle and inside results) is 1 115 693 KB/s for the NVMe SSD, 111 176 KB/s for the WD20EFRX and 83 521 KB/s for the WD20EARS. It turns out that the WD RED HDD is about 33% faster than the WD GREEN HDD (1.33 times its rate), while the NVMe SSD delivers about 10 times the transfer rate of the WD RED HDD. On the other hand, the external USB 3.1 M.2 SATA SSD enclosure averages only 31 751 KB/s. One last but crucial test, covering an SSD attached to the onboard SATA controller, will be added here to make sure and prove NVMe dominance over SATA.
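The per-drive averages and ratios can be cross-checked by recomputing the arithmetic mean of the three DiskInfo transfer rates for each device. The numbers below are copied from the DiskInfo summary; the dictionary keys are just labels chosen for this sketch.

```python
from statistics import mean

# Outside, middle and inside transfer rates in kbytes/sec,
# copied from the DiskInfo summary results.
RATES = {
    "NVMe SSD 980": (1087557, 1099090, 1160432),
    "WD20EFRX (RED)": (146521, 116847, 70159),
    "WD20EARS (GREEN)": (113811, 87543, 49209),
    "USB 3.1 M.2 SATA": (31503, 31850, 31900),
}

# Plain arithmetic mean of the three zones for every drive.
averages = {name: mean(values) for name, values in RATES.items()}
for name, average in averages.items():
    print(f"{name:18s} {average:10.0f} kbytes/sec")

# Ratios between the averaged transfer rates.
red_vs_green = averages["WD20EFRX (RED)"] / averages["WD20EARS (GREEN)"]
nvme_vs_red = averages["NVMe SSD 980"] / averages["WD20EFRX (RED)"]
print(f"RED vs GREEN: {red_vs_green:.2f}x")
print(f"NVMe vs RED:  {nvme_vs_red:.1f}x")
```

A ratio of 1.33 means the faster drive is 33% faster, and a ratio of 10 means ten times the rate, which is why it helps to print the ratio itself rather than a percentage.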

DiskInfo result for a single 3.5″ 2TB 5400RPM HDD WD20EARS (GREEN Series) drive attached to the onboard SATA controller:

    # diskinfo -tv /dev/ada2
    /dev/ada2
        512                     # sectorsize
        2000398934016           # mediasize in bytes (1.8T)
        3907029168              # mediasize in sectors
        4096                    # stripesize
        0                       # stripeoffset
        3876021                 # Cylinders according to firmware.
        16                      # Heads according to firmware.
        63                      # Sectors according to firmware.
        WDC WD20EARS-00MVWB0    # Disk descr.
        XXX                     # Disk ident.
        ahcich4                 # Attachment
        No                      # TRIM/UNMAP support
        Unknown                 # Rotation rate in RPM
        Not_Zoned               # Zone Mode

    Seek times:
        Full stroke:      250 iter in 7.135585 sec = 28.542 msec
        Half stroke:      250 iter in 5.288793 sec = 21.155 msec
        Quarter stroke:   500 iter in 7.540787 sec = 15.082 msec
        Short forward:    400 iter in 3.036829 sec = 7.592 msec
        Short backward:   400 iter in 2.956946 sec = 7.392 msec
        Seq outer:        2048 iter in 0.254515 sec = 0.124 msec
        Seq inner:        2048 iter in 0.199511 sec = 0.097 msec

    Transfer rates:
        outside:  102400 kbytes in 0.899735 sec = 113811 kbytes/sec
        middle:   102400 kbytes in 1.169709 sec = 87543 kbytes/sec
        inside:   102400 kbytes in 2.080911 sec = 49209 kbytes/sec

DiskInfo result for a single 3.5″ 2TB 5400RPM HDD WD20EFRX (RED Series) drive attached to the onboard SATA controller:

    # diskinfo -tv /dev/ada0
    /dev/ada0
        512                     # sectorsize
        2000398934016           # mediasize in bytes (1.8T)
        3907029168              # mediasize in sectors
        4096                    # stripesize
        0                       # stripeoffset
        3876021                 # Cylinders according to firmware.
        16                      # Heads according to firmware.
        63                      # Sectors according to firmware.
        WDC WD20EFRX-68EUZN0    # Disk descr.
        XXX                     # Disk ident.
        ahcich0                 # Attachment
        No                      # TRIM/UNMAP support
        5400                    # Rotation rate in RPM
        Not_Zoned               # Zone Mode

    Seek times:
        Full stroke:      250 iter in 6.653120 sec = 26.612 msec
        Half stroke:      250 iter in 5.172312 sec = 20.689 msec
        Quarter stroke:   500 iter in 7.808667 sec = 15.617 msec
        Short forward:    400 iter in 2.082164 sec = 5.205 msec
        Short backward:   400 iter in 3.350534 sec = 8.376 msec
        Seq outer:        2048 iter in 0.141464 sec = 0.069 msec
        Seq inner:        2048 iter in 0.139262 sec = 0.068 msec

    Transfer rates:
        outside:  102400 kbytes in 0.698874 sec = 146521 kbytes/sec
        middle:   102400 kbytes in 0.876360 sec = 116847 kbytes/sec
        inside:   102400 kbytes in 1.459537 sec = 70159 kbytes/sec

DiskInfo result for a single M.2 NVMe Samsung SSD 980 1TB drive attached to the ICYBOX IB-PCI224M2-ARGB PCI-E 4.0 controller working on a PCI-E 2.0 capable motherboard:

    # diskinfo -tv /dev/nvd0
    /dev/nvd0
        512                     # sectorsize
        1000204886016           # mediasize in bytes (932G)
        1953525168              # mediasize in sectors
        4096                    # stripesize
        0                       # stripeoffset
        Samsung SSD 980 1TB     # Disk descr.
        XXX                     # Disk ident.
        nvme0                   # Attachment
        Yes                     # TRIM/UNMAP support
        0                       # Rotation rate in RPM

    Seek times:
        Full stroke:      250 iter in 0.008369 sec = 0.033 msec
        Half stroke:      250 iter in 0.005510 sec = 0.022 msec
        Quarter stroke:   500 iter in 0.014519 sec = 0.029 msec
        Short forward:    400 iter in 0.010368 sec = 0.026 msec
        Short backward:   400 iter in 0.020456 sec = 0.051 msec
        Seq outer:        2048 iter in 0.032788 sec = 0.016 msec
        Seq inner:        2048 iter in 0.032863 sec = 0.016 msec

    Transfer rates:
        outside:  102400 kbytes in 0.094156 sec = 1087557 kbytes/sec
        middle:   102400 kbytes in 0.093168 sec = 1099090 kbytes/sec
        inside:   102400 kbytes in 0.088243 sec = 1160432 kbytes/sec

DiskInfo result for the USB 3.1 M.2 SATA SSD disk enclosure:

    # diskinfo -tv /dev/da0
    /dev/da0
        512                     # sectorsize
        256060514304            # mediasize in bytes (238G)
        500118192               # mediasize in sectors
        4096                    # stripesize
        0                       # stripeoffset
        31130                   # Cylinders according to firmware.
        255                     # Heads according to firmware.
        63                      # Sectors according to firmware.
        USB3.1                  # Disk descr.
        XXX                     # Disk ident.
        umass-sim0              # Attachment
        No                      # TRIM/UNMAP support
        Unknown                 # Rotation rate in RPM
        Not_Zoned               # Zone Mode

    Seek times:
        Full stroke:      250 iter in 0.096377 sec = 0.386 msec
        Half stroke:      250 iter in 0.099408 sec = 0.398 msec
        Quarter stroke:   500 iter in 0.216023 sec = 0.432 msec
        Short forward:    400 iter in 0.190744 sec = 0.477 msec
        Short backward:   400 iter in 0.203053 sec = 0.508 msec
        Seq outer:        2048 iter in 0.769551 sec = 0.376 msec
        Seq inner:        2048 iter in 0.769675 sec = 0.376 msec

    Transfer rates:
        outside:  102400 kbytes in 3.250481 sec = 31503 kbytes/sec
        middle:   102400 kbytes in 3.215029 sec = 31850 kbytes/sec
        inside:   102400 kbytes in 3.210055 sec = 31900 kbytes/sec
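Collecting the summary figures by hand from several DiskInfo runs is error-prone, so here is a small sketch that extracts the "Transfer rates" section from saved `diskinfo -tv` output. It assumes the line layout shown in the listings above; the function name `parse_transfer_rates` and the regular expression are my own and are not part of any FreeBSD tool.

```python
import re

# Matches lines such as
#   "outside: 102400 kbytes in 0.899735 sec = 113811 kbytes/sec"
RATE_LINE = re.compile(
    r"^\s*(outside|middle|inside):\s+(\d+) kbytes in\s+([\d.]+) sec =\s+(\d+) kbytes/sec"
)


def parse_transfer_rates(text: str) -> dict:
    """Return {zone: (reported, recomputed)} rates in kbytes/sec."""
    rates = {}
    for line in text.splitlines():
        match = RATE_LINE.match(line)
        if match:
            zone, kbytes, seconds, reported = match.groups()
            # Recompute the rate from the raw numbers as a sanity check.
            recomputed = int(kbytes) / float(seconds)
            rates[zone] = (int(reported), recomputed)
    return rates


# Sample taken from the WD20EARS listing.
SAMPLE = """\
Transfer rates:
    outside:  102400 kbytes in 0.899735 sec = 113811 kbytes/sec
    middle:   102400 kbytes in 1.169709 sec = 87543 kbytes/sec
    inside:   102400 kbytes in 2.080911 sec = 49209 kbytes/sec
"""

rates = parse_transfer_rates(SAMPLE)
for zone, (reported, recomputed) in rates.items():
    print(f"{zone:8s} reported {reported:8d}  recomputed {recomputed:10.1f}")
```

The recomputed column should agree with the value printed by DiskInfo to within rounding, which is a cheap way to catch copy and paste mistakes in the summary.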